The rise of generative artificial intelligence (AI) tools like ChatGPT, Google Bard, and Jasper Chat raises many questions about the ways we teach and the ways students learn. While some of these questions concern how we can use AI to accomplish learning goals and whether or not that is advisable, others relate to how we can facilitate critical analysis of AI itself.

The wide variety of questions about AI and the rapidly changing landscape of available tools can make it hard for educators to know where to start when designing an assignment. When confronted with new technologies—and the new teaching challenges they present—we can often turn to existing evidence-based practices for the guidance we seek.

This guide will apply the Transparency in Learning and Teaching (TILT) framework to "un-complicate" planning an assignment that uses AI, providing guiding questions for you to consider along the way.

The result should be an assignment that helps you and your students approach the use of AI in a more thoughtful, productive, and ethical manner.

Plan your assignment.

The TILT framework offers a straightforward approach to assignment design that has been shown to improve academic confidence and success, sense of belonging, and metacognitive awareness by making the learning process clear to students (Winkelmes et al., 2016). The TILT process centers around deciding—and then communicating—three key components of your assignment: 1) purpose, 2) tasks, and 3) criteria for success.

Step 1: Define your purpose.

To make effective use of any new technology, it is important to reflect on our reasons for incorporating it into our courses. In the first step of TILT, we think about what we want students to gain from an assignment and how we will communicate that purpose to students.

The SAMR model, a useful tool for thinking about educational technology use in our courses, lays out four tiers of technology integration. The tiers, roughly in order of their sophistication and transformative power, are Substitution, Augmentation, Modification, and Redefinition. Each tier may suggest different approaches to consider when integrating AI into teaching and learning activities.

Questions to consider:

- Do you intend to use AI as a substitution, augmentation, modification, or redefinition of an existing teaching practice or educational technology?

- What are your learning goals and expected learning outcomes?

- Do you want students to understand the limitations of AI or to experience its applications in the field?

- Do you want students to reflect on the ethical implications of AI use?

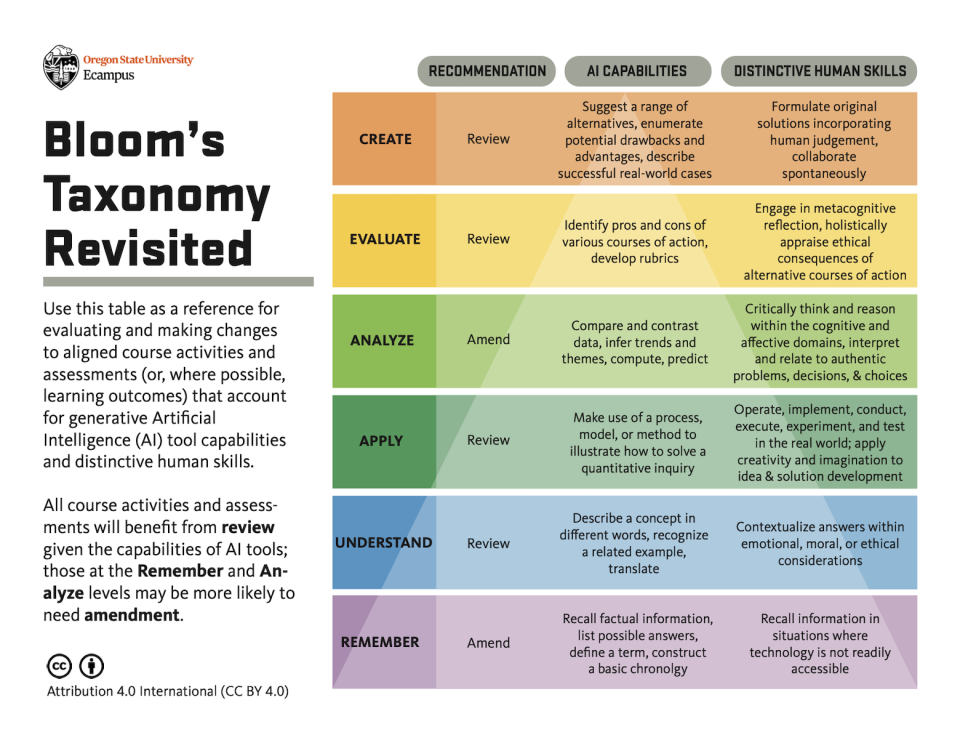

Bloom’s Taxonomy is another useful tool for defining your assignment’s purpose and your learning goals and outcomes.

This downloadable Bloom’s Taxonomy Revisited resource, created by Oregon State University, highlights the differences between AI capabilities and distinctive human skills at each Bloom's level, indicating the types of assignments you should review or change in light of AI. Bloom's Taxonomy Revisited is licensed under Creative Commons Attribution 4.0 International (CC BY 4.0).

Access a transcript of the graphic.

Step 2: Define the tasks involved.

In the next step of TILT, you list the steps students will take when completing the assignment. In what order should they do specific tasks, what do they need to be aware of to perform each task well, and what mistakes should they avoid? Outlining each step is especially important if you’re asking students to use generative AI in a limited manner. For example, if you want them to begin with generative AI but then revise, refine, or expand upon its output, make clear which steps should involve their own thinking and work as opposed to AI’s thinking and work.

Questions to consider:

- Are you designing this assignment as a single, one-time task or as a longitudinal task that builds over time or across curricular and co-curricular contexts? For longitudinal tasks, consider the experiential learning cycle (Kolb, 1984). In Kolb’s cycle, learners have a concrete experience followed by reflective observation, abstract conceptualization, and active experimentation. For example, students could record their generative AI prompts, the results, a reflection on the results, and the next prompt they used to get improved output. In subsequent tasks, students could expand upon or revise the AI output into a final product. Requiring students to provide a record of their reflections, prompts, and results can create an “AI audit trail,” making the task and learning more transparent.

- What resources and tools are permitted or required for students to complete the tasks involved with the assignment? Make clear which steps should involve their own thinking (versus AI-generated output, for example), which course materials are required, and whether references are required. Include any ancillary resources students will need to accomplish tasks, such as guidelines on how to cite AI in APA 7th edition style.

- How will you offer students flexibility and choice? At this time, many generative AI tools have not been approved for use by Ohio State, meaning they have not been vetted for security, privacy, or accessibility issues. Many platforms are known to be incompatible with screen readers, and there are outstanding questions about what these tools do with user data. Students may have understandable apprehensions about using these tools or encounter barriers to doing so successfully. So while there may be value in giving students first-hand experience with using AI, it’s important to give them the choice to opt out. As you outline your assignment tasks, plan how to provide alternative options to complete them. For example, could you provide AI output you’ve generated for students to work with, demonstrate use of the tool during class, or allow use of another tool that enables students to meet the same learning outcomes?

To support the university-wide AI Fluency initiative, Ohio State has reviewed and vetted a number of standalone generative AI applications for use in the classroom. Among the tools currently vetted are Microsoft Copilot, Adobe Firefly, and Google Gemini. The university is committed to ensuring our use of these tools meets security standards while supporting productivity and efficiency. Keep in mind that data you enter in AI applications using a personal account will not be protected; you must log in to an approved AI tool with your Ohio State credentials (lastname.#@osu.edu and password) before entering any institutional data above the S1 classification.

See Additional Tools for more AI applications that can support teaching and learning, or explore all Approved AI Tools.

- What are your expectations for academic integrity? This is a helpful step for clarifying your academic integrity guidelines for this assignment, around AI use specifically as well as for other resources and tools. The standard Academic Integrity Icons in the table below can help you call out what is permissible and what is prohibited. If any steps for completing the assignment require (or expressly prohibit) AI tools, be as clear as possible in highlighting which ones, as well as why and how AI use is (or is not) permitted.

| Permitted | Not permitted | Potential Rationale |
|---|---|---|
| | | Getting help on the assignment [is] [is not] permitted. |
| | | Collaborating, or completing the assignment with others, [is] [is not] permitted. |
| | | Copying or reusing previous work [is] [is not] permitted. |
| | | Open-book research for the assignment [is] [is not] permitted and encouraged. |
| | | Working from home on the assignment [is] [is not] permitted. |
| | | Use of generative artificial intelligence for the assignment [is] [is not] permitted. |

Step 3: Define criteria for success.

An important feature of transparent assignments is that they make clear to students how their work will be evaluated. During this TILT step, you will define criteria for a successful submission—consider creating a rubric to clarify these expectations for students and simplify your grading process. If you intend to use AI as a substitute or augmentation for another technology, you might be able to use an existing rubric with little or no change. However, if AI use is modifying or redefining the assignment tasks, a new grading rubric will likely be needed.

Questions to consider:

- How will you grade this assignment? What key criteria will you assess?

- What indicators will show each criterion has been met?

- What qualities distinguish a successful submission from one that needs improvement?

- Will you grade students on the product only or on aspects of the process as well? For example, if you have included a reflection task as part of the assignment, you might include that as a component of the final grade.

Alongside your rubric, it is helpful to prepare examples of successful (and even unsuccessful) submissions to provide more tangible guidance to students. In addition to samples of the final product, you could share examples of effective AI prompts, reflection tasks, and AI citations. Examples may be drawn from previous student work or models that you have mocked up, and they can be annotated to highlight notable elements related to assignment criteria.

Present and discuss your assignment.

As clear as we strive to be in our assignment planning and prompts, there may be gaps or confusing elements we have overlooked. Explicitly going over your assignment instructions—including the purpose, key tasks, and criteria—will ensure students are equipped with the background and knowledge they need to perform well. These discussions also offer space for students to ask questions and air unanticipated concerns, which is particularly important given the potential hesitance some may have around using AI tools.

Questions to consider:

- How will this assignment help students learn key course content, contribute to the development of important skills such as critical thinking, or help them meet your learning goals and outcomes?

- How might students apply the knowledge and skills acquired in their future coursework or careers?

- In what ways will the assignment further students’ understanding and experience around generative AI tools, and why does that matter?

- What questions or barriers do you anticipate students might encounter when using AI for this assignment?

As noted above, many students are unaware of the accessibility, security, privacy, and copyright concerns associated with AI, or of other pitfalls they might encounter working with AI tools. Openly discussing AI’s limitations and the inaccuracies and biases it can create and replicate will help students anticipate barriers to success on the assignment, increase their digital literacy, and make them more informed and discerning users of technology.

Examples

Using the Transparent Assignment Template

Sample Assignment: AI-Generated Lesson Plan

Summary

In many respects, the rise of generative AI has reinforced existing best practices for assignment design—craft a clear and detailed assignment prompt, articulate academic integrity expectations, increase engagement and motivation through authentic and inclusive assessments. But AI has also encouraged us to think differently about how we approach the tasks we ask students to undertake, and how we can better support them through that process. While it can feel daunting to re-envision or reformat our assignments, AI presents us with opportunities to cultivate the types of learning and growth we value, to help students see that value, and to grow their critical thinking and digital literacy skills.

Using the Transparency in Learning and Teaching (TILT) framework to plan assignments that involve generative AI can help you clarify expectations for students and take a more intentional, productive, and ethical approach to AI use in your course.

- Step 1: Define your purpose. Think about what you want students to gain from this assignment. What are your learning goals and outcomes? Do you want students to understand the limitations of AI, see its applications in your field, or reflect on its ethical implications? The SAMR model and Bloom's Taxonomy are useful references when defining your purpose for using (or not using) AI on an assignment.

- Step 2: Define the tasks involved. List the steps students will take to complete the assignment. What resources and tools will they need? How will students reflect upon their learning as they proceed through each task? What are your expectations for academic integrity?

- Step 3: Define criteria for success. Make clear to students your expectations for success on the assignment. Create a rubric to call out key criteria and simplify your grading process. Will you grade the product only, or parts of the process as well? What qualities indicate an effective submission? Consider sharing tangible models or examples of assignment submissions.

Finally, it is time to make your assignment guidelines and expectations transparent to students. Walk through the instructions explicitly—including the purpose, key tasks, and criteria—to ensure they are prepared to perform well.

References

Winkelmes, M. (2013). Transparency in Teaching: Faculty Share Data and Improve Students’ Learning. Liberal Education 99(2).

Winkelmes, M. (2013). Transparent Assignment Design Template for Teachers. TiLT Higher Ed: Transparency in Learning and Teaching. https://bdl2jezatgadvbda.public.blob.vercel-storage.com/pdf/Transparent%20Assignment%20Templates-2LhjMK8n0IxS1retxNyiAj7P1qejRd.pdf

Winkelmes, M., Bernacki, M., Butler, J., Zochowski, M., Golanics, J., & Weavil, K. (2016). A Teaching Intervention that Increases Underserved College Students’ Success. Peer Review.